In a previous article, we discussed the importance of designing chatbots with care. To recap: careless chatbot design reinforces negative gender biases and stereotypes. This causes problems in both the digital and physical worlds, which is why it’s essential to design chatbots with gender equality in mind.

Enter TechnoFeminism

TechnoFeminism, a.k.a. Feminist Technoscience, is a transdisciplinary field of science studies that emerged after decades of criticism of gender inequality and the lack of gender-equal representation in the science and technology sectors.

Through TechnoFeminism, we can see how gender shapes technology and vice versa.

Introducing the Feminist Design tool

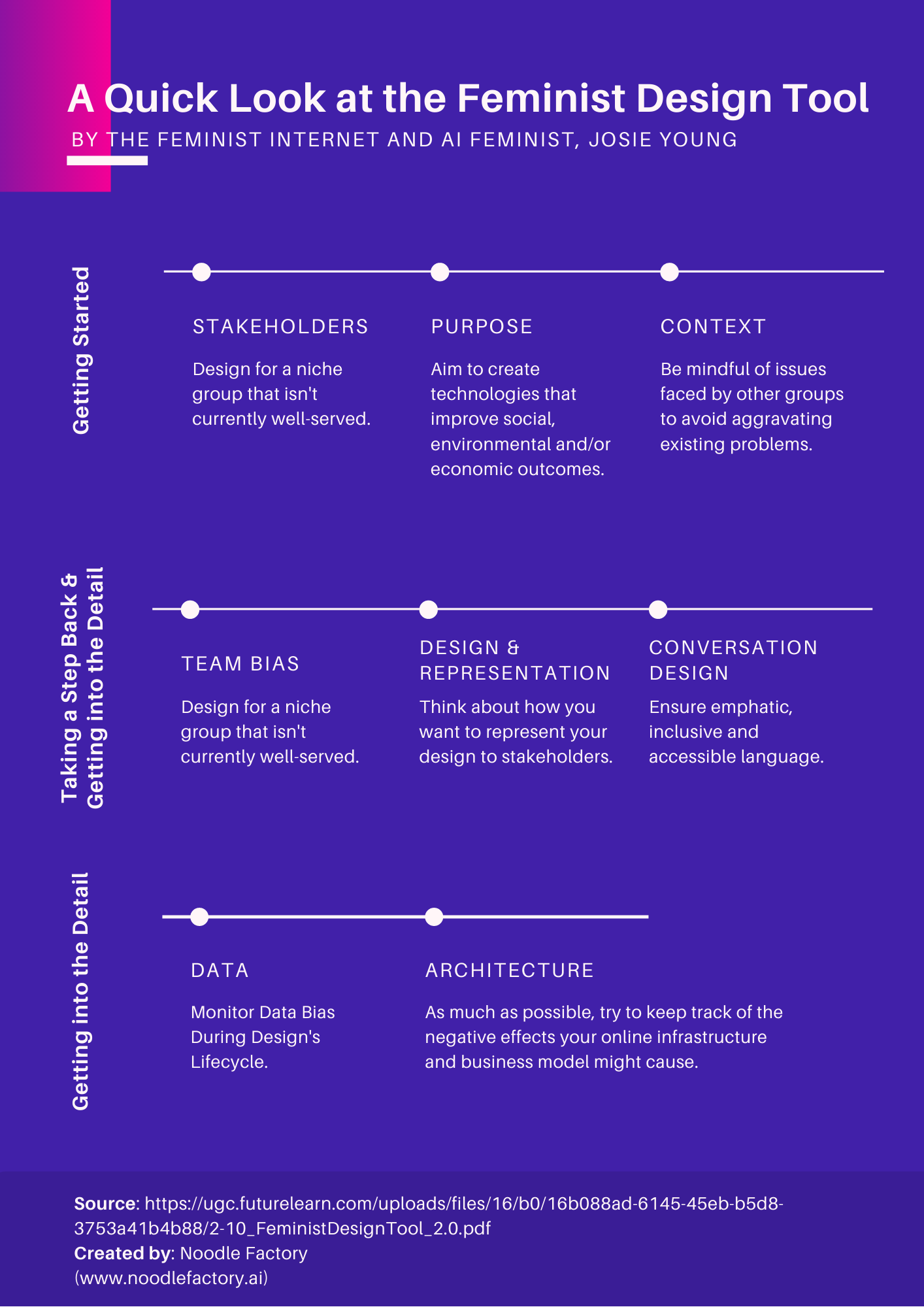

The Feminist Internet and AI feminist Josie Young created a tool that helps you design feminist chatbots. Its eight-step guide walks you through the planning, building, and design process.

Here’s a look at the Feminist Design tool

Getting Started: Stakeholders

The ever-popular idea of ‘universal users’ sounds great when you think of designing for everyone, but it is flawed: the needs of specific user groups get overlooked in favour of the majority.

Instead of designing for everyone, design for a niche group that isn’t currently well served. Doing so increases your chances of creating something that truly works for everyone. Case in point: accessibility tools such as Magnifier and VoiceOver.

Getting Started: Purpose

Technologies are built for many reasons, and they can have positive or negative consequences. You should aim to design technologies that improve, rather than damage, social, environmental, and economic outcomes.

This means you have to clearly define your creation’s purpose and evaluate its benefits.

Getting Started: Context

Technology sits within political, social, economic, cultural, technological, legal, and environmental power dynamics, so you should be mindful of the issues other groups face to avoid aggravating existing problems.

For instance, minorities still face difficulties when using voice activation because most virtual assistants are unable to understand their accents.

Taking a Step Back: Team Bias

We are all products of our upbringing, interactions, and experiences, and the perspectives they give us can shape our decisions without us noticing.

If you don’t reflect on your biases, you run the risk of reinforcing negative stereotypes, which harms stakeholders.

Getting into the Detail: Design & Representation

The design and representation of AI agents can reinforce or challenge stereotypes. For example, characterising financial chatbots as male reinforces the stereotype that men are more financially capable than women.

Think about how you want to represent your design to stakeholders.

Getting into the Detail: Conversation Design

Since conversation is usually the primary interface between stakeholders and your design, dialogue must be crafted carefully. It is through conversation that stakeholders decide whether your design is effective and whether it discriminates against them.

A feminist approach to conversation means using empathetic, inclusive, and accessible language.
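One way to put this into practice is to review draft chatbot replies for gendered wording before they ship. The sketch below is only an illustration: the `GENDERED_TERMS` word list and the `flag_gendered_terms` helper are hypothetical and not part of the Feminist Design tool, and a real word list should be curated with your stakeholders.

```python
import re

# Hypothetical, deliberately tiny word list (an assumption for illustration).
# Maps a gendered term to a more inclusive alternative.
GENDERED_TERMS = {
    "guys": "everyone",
    "chairman": "chairperson",
    "manpower": "workforce",
}


def flag_gendered_terms(reply):
    """Return (term, suggestion) pairs for gendered terms found in a reply."""
    findings = []
    for term, suggestion in GENDERED_TERMS.items():
        # Match whole words only, ignoring case.
        if re.search(rf"\b{re.escape(term)}\b", reply, flags=re.IGNORECASE):
            findings.append((term, suggestion))
    return findings


findings = flag_gendered_terms("Hi guys, the chairman will reply shortly.")
for term, suggestion in findings:
    print(f"Consider replacing '{term}' with '{suggestion}'")
```

A word list like this only catches the obvious cases. Empathy and accessibility still need human review with the people the chatbot is meant to serve.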

Getting into the Detail: Data

Biases in your datasets can negatively impact your design and its stakeholders, so it is important to monitor data bias throughout your design’s lifecycle.
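As a rough illustration of what monitoring could look like, the sketch below counts how often each group appears in a dataset and flags groups that fall below a chosen share. The `representation_report` function, the `speaker_group` field, the sample records, and the `min_share` threshold are all assumptions made for this example. Representation counts are only one narrow signal of data bias, not a complete audit.

```python
from collections import Counter


def representation_report(records, group_key, min_share=0.2):
    """Return each group's count, share, and whether it falls below min_share."""
    counts = Counter(record[group_key] for record in records)
    total = sum(counts.values())
    report = {}
    for group, count in counts.items():
        share = count / total
        report[group] = {
            "count": count,
            "share": round(share, 3),
            "under_represented": share < min_share,
        }
    return report


# Hypothetical training utterances tagged with a speaker group (illustrative only).
sample = [
    {"text": "What is my balance?", "speaker_group": "A"},
    {"text": "Show my balance", "speaker_group": "A"},
    {"text": "Check balance please", "speaker_group": "A"},
    {"text": "Balance enquiry", "speaker_group": "A"},
    {"text": "How much do I have?", "speaker_group": "B"},
]

report = representation_report(sample, "speaker_group", min_share=0.25)
for group, stats in sorted(report.items()):
    print(group, stats)
```

Running a check like this each time the dataset changes, rather than once at launch, is what keeps it in line with monitoring across the whole lifecycle.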

Getting into the Detail: Architecture

The complex ecosystem of physical infrastructure, hardware, software, and human labour behind a technology is often hidden, making it hard to keep track of its negative effects or any exploitation involved.

It isn’t always possible to have a fully feminist architecture, but it’s important to take the necessary steps towards it.